Why object oriented codebases are harder to refactor

Software engineering doctrines (object oriented, functional, etc.) act more like religions than anythings else. There are some good ideas in each of those design philosophies, but odds are if you work in enterprise software, you’ll be at one point subjected to the orthodox church of objected oriented (OO) programming: hardcore GangOfFour patterns.

The ultimate incarnation of this programming style ends with breaking up and separating logic between with boundaries between everything, recursively. This is usually done in the name of something vaguely good, like “modularity”, “decoupling” or “abstraction”. Taken to absurdity, you end up with codebases looking like FizzBuzz Enterprise Edition ™, where you have vast seas of code where nothing ever happens except pointing you to other places, and only once in a while you actually happen upon a sparse island of business logic.

That said, even the milder forms of orthodox OO design have real problems:

First, it’s bad for performance. As Mike Acton shows in his 2014 talk, OO codebases are generally broken into atomic structures that talk to each other through interfaces. This means almost every operation fetches pointers, sometimes several[footnote]You chase a pointer to the interface class, which chases a pointer to the instance method, etc.[/footnote], each of which do a round-trip to RAM (costing hundreds of CPU cycles). In comparison, codebases that are more “tightly coupled to their data” like those using data-oriented are several orders of magnitude faster.

Some will retort it’s not the end of the world: CRUD apps aren’t performance critical. I disagree. This mentality is exactly what leads to modern software being so bloated and slow.

Second, it’s harmful for the ultimate stated goal of object oriented codebases: maintainability.

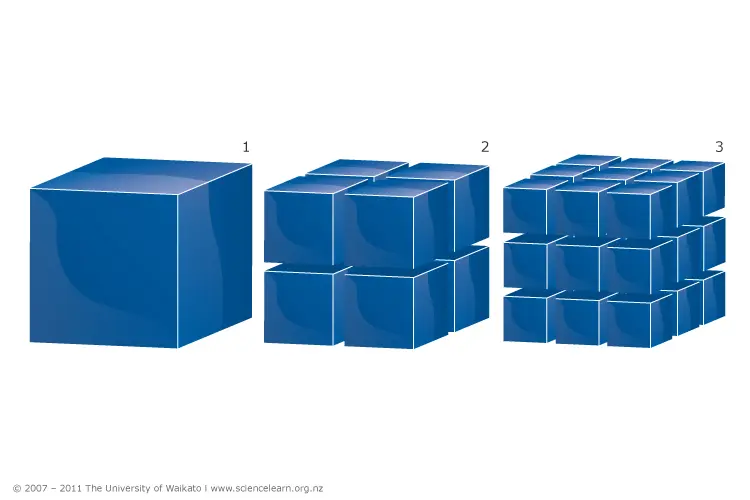

The OO doctrine dictates that we have one unit test for every public method in every class in a codebase. This makes some sense: you should only test interfaces. The problem comes when we break modules into sub-modules, we drastically increase the surface to be tested:

More surface to test means more tests. Tests are nice, but they tend to freeze whatever they’re testing in place: it’s normal to see as much test code as there is, you know, code. The workload to refactor is simply much larger if you have more tested surface. This is true even if you mock everything, because the tests still depend on the interface which tends to change under refactoring.

Moreover while all the units are tested, you still need to test how the system works. In OO, this is usually called “integration tests”, but when you keep design more monolithic (see the cube on the left), it’s just “the tests”. It’s perfectly fine for many kinds of systems to only have integration tests.

Here’s an example of the common development pattern from a place I’ve worked at before. Everyone involved is a “senior” dev (4+ year experience):

Business requirements are a service exposes an API to other through JSON.

The original dev includes a data cache for performance that updates itself automatically once in a while. This touches the database. He submits it for review. The entire thing is around 700 lines of python, half of which being tests.

“This breaks the Single Responsibility principle!” Cries one senior dev in review. It should be broken up with a cache class, a connector class to touch the database, and a web interface class to do the JSON and HTTP stuff. Reviewer #2 agrees.

Two more weekly dev cycles go by. The original service goes from 700 to over 3500 lines, with mocked unit tests for each class and integration tests between classes. The original tests are now called end-to-end tests. Functionality has not changed. No additional bugs have been found.

Months later, making any addition to that service now takes an additional day of work due to touching so much more code.

Needless to say, I’m not at that place anymore.

What you should be doing

Don’t drink the kool-aid.

Most design philosophies have good parts and bad parts. OO has good ideas. Functional programming also has good ideas (pure functions are great!) but orthodox functional design also [footnote]Luckily, most of us won’t have to deal with the orthodox functional programmers, because they’re safely locked away in academia[/footnote]. Data oriented design has good ideas. Procedural code is all you need most of the time.

When it comes to tests, start with behavior tests. Add tests for each bug you fix and each behavior you add to the system. If you write unit tests for development, delete them before merging them into the master codebase.

If at all possible, use programming languages that don’t have strong opinions on which design paradigm you should use.