A decade later, Reddit's comment sorting still fails to do its job

Reddit started sorting comments by a method they call “best”[footnote]I won’t stop putting quotation marks around it. It’s not “the best” scoring method and having it call itself that makes it too big for its britches and makes me want to take it down a notch.[/footnote] around a decade ago.

The main problem it tries to solve, according to the blog post, is that the earliest comments tend to stay at the top of any Reddit post. The “best” sorting method fails miserably at this objective.

Today we’ll go over why it’s failing and how to fix it.

There’s a difference between a metric and a ranking

Let’s say we assign a score to each comment. When you open the comments on a post, they appear in descending order of score (highest score at the top, lowest at the bottom). Evan Miller designed the “best” scoring method and laid out his reasoning in this blog post, where he shows why naive scores are bad:

Raw Score (upvotes - downvotes) is bad because a comment with many votes might have a large score even though a relatively low share of its voters upvoted it. A comment with 4500 upvotes and 4000 downvotes (score 500) would be ranked above a comment with 400 upvotes and 0 downvotes (score 400).

Average Rating (upvotes / [upvotes + downvotes]) is bad for the opposite reason: comments with very few votes will have either a perfect or a terrible score (due to the low sample size and sheer luck), so the overall ranking will vary wildly. Comments with many votes tend toward their real score, which is generally less than perfect, so they won’t be at the top.
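To make both failure modes concrete, here is a small sketch of the two naive metrics applied to the comments above. The `Comment` class and function names are my own, and scoring an unvoted comment as 0.0 is an arbitrary choice.

```python
from dataclasses import dataclass


@dataclass
class Comment:
    upvotes: int
    downvotes: int


def raw_score(c: Comment) -> int:
    """Net score: upvotes minus downvotes."""
    return c.upvotes - c.downvotes


def average_rating(c: Comment) -> float:
    """Fraction of votes that are upvotes (0.0 if nobody has voted yet)."""
    total = c.upvotes + c.downvotes
    return c.upvotes / total if total else 0.0


controversial = Comment(4500, 4000)
unanimous = Comment(400, 0)
lucky = Comment(1, 0)  # a single early upvote

# Raw score puts the controversial comment first.
print(raw_score(controversial), raw_score(unanimous))  # 500 400
# Average rating ties one lucky upvote with 400 unanimous ones.
print(average_rating(lucky), average_rating(unanimous))  # 1.0 1.0
```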

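Reddit’s “best” scoring method calculates the lower bound of a confidence interval (the Wilson score interval) on the fraction of votes that are upvotes. Read his blog post if you want the math. Better yet, I pulled up the actual Reddit source code for you to see how it’s calculated. Note that while Evan Miller’s post uses a 95% interval, Reddit’s code sets z = 1.281551565545, which its comment labels 80% confidence.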

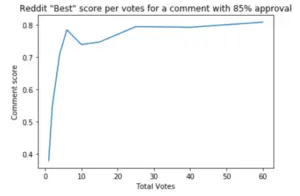
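For readers who prefer text to a screenshot, here is a Python sketch of the Wilson lower bound. It is my own rewrite of the formula, not Reddit’s code; `z` is a parameter that sets the confidence level, and its default of 1.96 is the 95% level from Evan Miller’s post. Check the source shown above for the exact constant Reddit uses and pass it as `z` to mirror it.

```python
import math


def wilson_lower_bound(ups: int, downs: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the upvote fraction.

    z is the standard-normal quantile that sets the confidence level;
    1.96 gives the 95% level used in Evan Miller's post.
    """
    n = ups + downs
    if n == 0:
        return 0.0  # no votes yet: lowest possible score
    p = ups / n  # observed fraction of upvotes
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre - margin) / (1 + z * z / n)


# The 400/0 and 4500/4000 comments from earlier, plus one with a single upvote.
examples = {"400-0": (400, 0), "4500-4000": (4500, 4000), "1-0": (1, 0)}
ranking = sorted(examples, key=lambda name: wilson_lower_bound(*examples[name]), reverse=True)
print(ranking)  # ['400-0', '4500-4000', '1-0']
```

Unlike raw score, the bound ranks the unanimous comment above the controversial one, and unlike the average rating, it refuses to trust a single upvote.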

If you want to see what the “best” score looks like, I plotted its output for a comment with an 85% upvote ratio, from 0 to 100 votes.

We see that the score does converge to its true value, but it takes about 25 votes to get there. Below that many votes, the comment’s score is artificially low, because the “best” formula punishes small sample sizes.
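A curve like the one described can be computed by holding the upvote fraction at 0.85 and evaluating the lower bound for 0 to 100 votes. This sketch treats the fraction as exactly 0.85 at every vote count (an idealization, since 0.85 × n is rarely a whole number) and assumes z = 1.96, so its values will differ from a plot made with another z. The function name is my own.

```python
import math


def wilson_lower_bound_from_ratio(p: float, n: int, z: float = 1.96) -> float:
    """Wilson lower bound for an observed upvote fraction p over n votes."""
    if n == 0:
        return 0.0
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre - margin) / (1 + z * z / n)


TRUE_RATIO = 0.85  # the upvote ratio used for the plot
curve = [wilson_lower_bound_from_ratio(TRUE_RATIO, n) for n in range(0, 101)]

for n in (1, 5, 10, 25, 50, 100):
    print(f"{n:>3} votes -> score {curve[n]:.3f}")
```

The score rises with every extra vote but always stays below 0.85, which is exactly the small-sample penalty described above.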

Feedback Loops

Here’s an observation: a comment sorted at the top gets seen by more people. The flip side is also true: comments sorted at the bottom are seen by few readers, if any.

Feedback loops aren’t mentioned in Evan’s blog post, but they must have been on the designers’ minds if they wanted to avoid the “early comments stick at the top” problem. That problem persists. Almost any comment posted after another comment has reached critical mass at the top will struggle to collect the ~25 votes its score needs to converge: ranked low, it is rarely read, so it never gathers a large enough sample of votes to climb.
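The loop can be illustrated with a toy simulation of my own, not taken from any of the sources in this post. Its assumptions are deliberately crude and hypothetical: each comment has a fixed upvote probability (“quality”) drawn uniformly from 0.5 to 0.95, one new comment arrives every 50 readers, and every reader reads and votes on exactly the top three comments by Wilson lower bound (z = 1.96). `simulate` and its parameters are illustrative names only.

```python
import math
import random


def wilson_lower_bound(ups: int, downs: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the upvote fraction."""
    n = ups + downs
    if n == 0:
        return 0.0
    p = ups / n
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre - margin) / (1 + z * z / n)


def simulate(num_comments=20, readers_per_comment=50, top_k=3, seed=0):
    """Toy model: readers only ever read (and vote on) the top_k comments."""
    rng = random.Random(seed)
    comments = []  # each entry: [quality, ups, downs]
    for reader in range(num_comments * readers_per_comment):
        if reader % readers_per_comment == 0:
            # Quality is independent of arrival time.
            comments.append([rng.uniform(0.5, 0.95), 0, 0])
        # Stable sort: ties at score 0 keep arrival order.
        ranked = sorted(comments, key=lambda c: wilson_lower_bound(c[1], c[2]), reverse=True)
        for c in ranked[:top_k]:
            if rng.random() < c[0]:
                c[1] += 1  # upvote
            else:
                c[2] += 1  # downvote
    return comments


for i, (quality, ups, downs) in enumerate(simulate()):
    print(f"comment {i:2d}: quality {quality:.2f}, votes {ups + downs}")
```

In this model the first comment is guaranteed at least 150 votes, while the last can receive at most 50 however good it is. Worse, any comment with at least one upvote has a positive lower bound, so once the first three comments each have an upvote, every later comment sits at 0 below them and is never read at all. Real readers do scroll, so this exaggerates the effect, but the direction is the same.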

Here are some more sources to convince you: the paper I linked in the introduction finds that, on average, 30% of the discussion in a Reddit post sits under a single top-level comment. Other researchers find that manipulating the first vote on a post to be an upvote or a downvote has a large effect on its final score. The popular r/AmItheAsshole subreddit ran its own little study and found that running all new posts in “contest” mode leads to better discussion quality.

The effect of this feedback loop is that votes on comments follow a rough power-law distribution, even though the quality of comments clearly doesn’t. This means discussion quality on Reddit is worse than it should be.

A metric is not a ranking method

So far we’ve discussed which metric to rank by, but let’s remind ourselves that “descending order” is just one of many ways to turn a list of scores into a ranking.

The best way to fix a feedback loop problem like this is with an exploration-exploitation framework. There are plenty of ways to do this; all of them give new comments a chance while keeping the statistically “best” comments mostly at the top. This blog explores the topic and finds that the Thompson sampling method performs best.
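One common way to apply Thompson sampling to ranking is sketched below under my own assumptions; it is not necessarily the exact variant the linked blog evaluated. Each comment gets a uniform Beta(1, 1) prior on its upvote fraction, so its posterior after `ups` upvotes and `downs` downvotes is Beta(ups + 1, downs + 1). On every page load, draw one sample per comment and sort by the draws. `thompson_rank` and the example comments are hypothetical.

```python
import random


def thompson_rank(comments, rng=None):
    """Order comment ids by one draw from each comment's Beta posterior.

    comments: list of (comment_id, ups, downs) tuples.
    Assumes a uniform Beta(1, 1) prior on each comment's upvote fraction.
    """
    if rng is None:
        rng = random.Random()
    # sorted() calls the key once per comment, so each gets exactly one draw.
    ranked = sorted(comments, key=lambda c: rng.betavariate(c[1] + 1, c[2] + 1), reverse=True)
    return [cid for cid, _, _ in ranked]


comments = [("old_a", 900, 100), ("old_b", 450, 50), ("old_c", 80, 20), ("new", 0, 0)]
rng = random.Random(42)
trials = 10_000
top_counts = {cid: 0 for cid, _, _ in comments}
for _ in range(trials):
    top_counts[thompson_rank(comments, rng)[0]] += 1
print(top_counts)
```

A comment with no votes draws from a uniform distribution, so it occasionally outranks comments with strong records and gets a chance to collect votes, while the well-established comments still take most of the top slots. As votes accumulate, each posterior narrows and the comment’s position settles near its true quality: exploration fades into exploitation on its own.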